Differentiable Window for Dynamic Local Attention

Published in ACL 2020 - The 58th Annual Meeting of the Association for Computational Linguistics, 2020

Recommended citation: Xuan-Phi Nguyen*, Thanh-Tung Nguyen*, Shafiq Joty & Xiaoli Li (2020). Differentiable Window for Dynamic Local Attention. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics. Association for Computational Linguistics.

Paper Link: https://www.aclweb.org/anthology/2020.acl-main.589/

Abstract

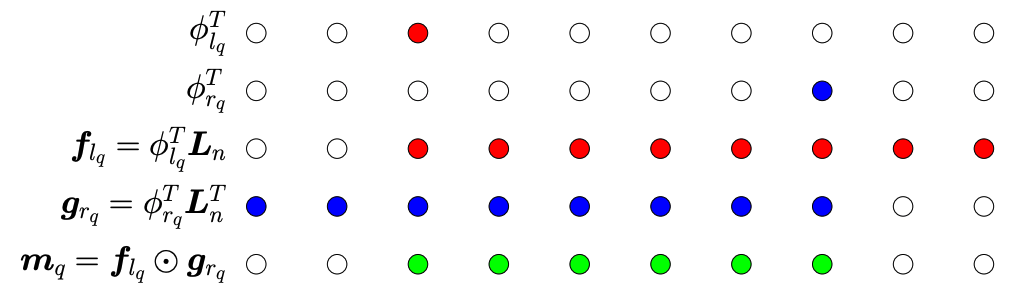

We propose Differentiable Window, a new neural module and general-purpose component for dynamic window selection. While universally applicable, we demonstrate a compelling use case of utilizing Differentiable Window to improve standard attention modules by enabling more focused attention over the input regions. We propose two variants of Differentiable Window, and integrate them within the Transformer architecture in two novel ways. We evaluate our proposed approach on a myriad of NLP tasks, including machine translation, sentiment analysis, subject-verb agreement and language modeling. Our experimental results demonstrate consistent and sizable improvements across all tasks.
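A window can be made differentiable by replacing hard start and end indices with a soft mask. The sketch below shows one way to do this. It is an illustrative assumption, not the paper's exact formulation or either of its two variants.

The model predicts logits for the window's left and right boundaries. Softmax turns each set of logits into a distribution, and cumulative sums give, for every position `i`, the probabilities `P(left <= i)` and `P(right >= i)`. Their product is a soft mask close to 1 inside the window and close to 0 outside it. Every step is smooth, so gradients can flow back to the boundary logits.

The helper names `soft_window_mask` and `windowed_attention` are hypothetical. The way the mask is combined with attention scores (multiplying into the exponentiated scores before normalizing) is also an assumption.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def soft_window_mask(left_logits, right_logits):
    """Hypothetical soft window: mask[i] = P(left <= i) * P(right >= i).

    Both inputs are boundary logits of the same length n (one per position).
    """
    p_left = softmax(left_logits)
    p_right = softmax(right_logits)
    n = len(p_left)

    # Left-to-right cumulative sum: probability the window has started by i.
    cdf_left, acc = [], 0.0
    for p in p_left:
        acc += p
        cdf_left.append(acc)

    # Right-to-left cumulative sum: probability the window has not ended before i.
    cdf_right, acc = [0.0] * n, 0.0
    for i in range(n - 1, -1, -1):
        acc += p_right[i]
        cdf_right[i] = acc

    return [a * b for a, b in zip(cdf_left, cdf_right)]


def windowed_attention(scores, mask, eps=1e-9):
    """Assumed combination: weights proportional to mask[i] * exp(scores[i])."""
    m = max(scores)
    weighted = [mk * math.exp(s - m) for s, mk in zip(scores, mask)]
    total = sum(weighted) + eps  # eps guards against an all-zero mask
    return [w / total for w in weighted]


if __name__ == "__main__":
    # Boundary logits peaked at positions 1 (left) and 3 (right) of 6 tokens.
    mask = soft_window_mask([0, 10, 0, 0, 0, 0], [0, 0, 0, 10, 0, 0])
    attn = windowed_attention([0.0] * 6, mask)
    print([round(m, 3) for m in mask])
    print([round(a, 3) for a in attn])
```

With uniform raw scores, almost all attention mass lands on positions 1 to 3. Positions outside the window receive near-zero weight, yet they still carry a nonzero gradient with respect to the boundary logits. That gradient is what lets such a window be learned end to end.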

Summary