Enhancing Attention with Explicit Phrasal Alignments

Published as an arXiv preprint, 2019

Recommended citation: Xuan-Phi Nguyen, Shafiq Joty, Thanh-Tung Nguyen (2019). Enhancing Attention with Explicit Phrasal Alignments.

Paper Link: https://openreview.net/forum?id=BygPq6VFvS

Abstract

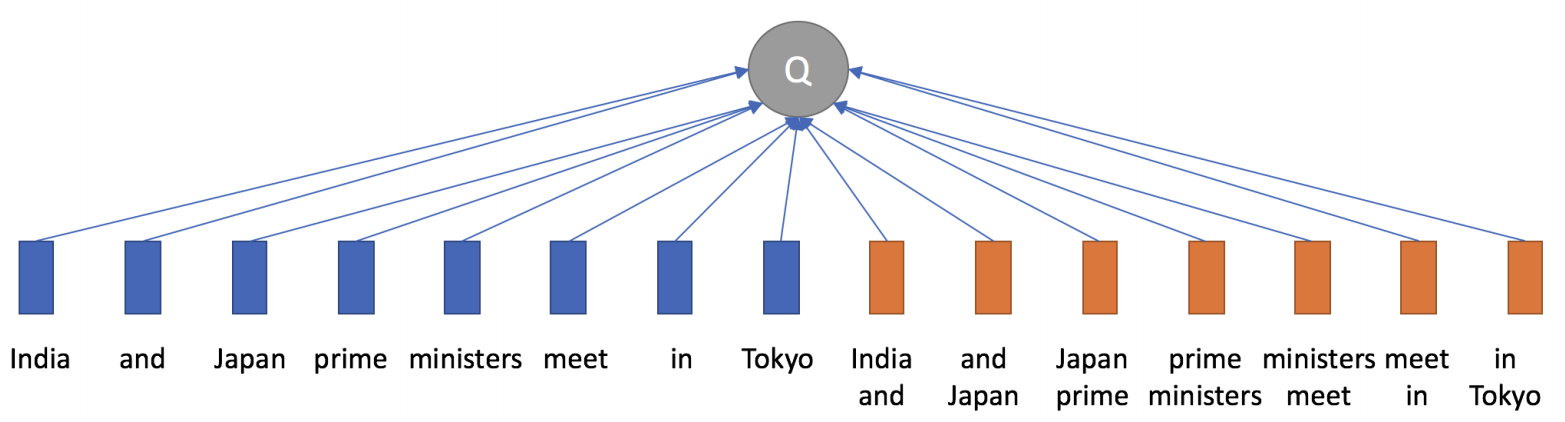

The attention mechanism is an indispensable component of any state-of-the-art neural machine translation system. However, existing attention methods are often token-based and ignore the importance of phrasal alignments, which are the backbone of phrase-based statistical machine translation. We propose a novel phrase-based attention method to model n-grams of tokens as the basic attention entities, and design multi-headed phrasal attentions within the Transformer architecture to perform token-to-token and token-to-phrase mappings. Our approach yields improvements in English-German, English-Russian and English-French translation tasks on the standard WMT'14 test set. Furthermore, our phrasal attention method shows improvements on the one-billion-word language modeling benchmark.
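The abstract distinguishes token-to-token from token-to-phrase attention. The pure-Python sketch below illustrates that distinction under several simplifying assumptions and is not the authors' implementation: (1) each contiguous n-gram is represented by mean-pooling its token vectors, standing in for whatever phrase encoder the paper actually uses; (2) keys and values are the raw vectors, with no learned projections; and (3) each attention head is assigned one n-gram order, and head outputs are concatenated. The function names `ngram_entities`, `attend`, and `phrasal_multihead` are hypothetical.

```python
import math
from typing import List, Sequence

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max score before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def ngram_entities(tokens: List[Vector], n: int) -> List[Vector]:
    """Turn every contiguous n-gram into a single attention entity.

    Assumption: mean pooling over the window is a stand-in phrase encoder.
    """
    if not 1 <= n <= len(tokens):
        raise ValueError("n-gram order must be between 1 and the sequence length")
    dim = len(tokens[0])
    return [
        [sum(tokens[i + k][j] for k in range(n)) / n for j in range(dim)]
        for i in range(len(tokens) - n + 1)
    ]


def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    # Scaled dot-product attention of one query over a set of entities.
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([dot(query, k) * scale for k in keys])
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]


def phrasal_multihead(
    queries: List[Vector], memory: List[Vector], orders: Sequence[int] = (1, 2)
) -> List[Vector]:
    """One head per n-gram order, outputs concatenated per query.

    n = 1 gives token-to-token attention; n >= 2 gives token-to-phrase
    attention. Keys double as values here (assumption: no projections).
    """
    entity_sets = [ngram_entities(memory, n) for n in orders]
    outputs = []
    for q in queries:
        row: Vector = []
        for entities in entity_sets:
            row.extend(attend(q, entities, entities))
        outputs.append(row)
    return outputs


# Toy example: three 2-dimensional token vectors used as both queries and memory.
src = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = phrasal_multihead(queries=src, memory=src, orders=(1, 2))
print(len(out), len(out[0]))  # 3 4
```

With `orders=(1, 2)`, the first head attends over the three individual tokens and the second over the two bigrams, so each query yields a 4-dimensional output (two heads of width 2). A trained model would instead learn per-head query, key, and value projections, and would likely use a learned phrase representation rather than mean pooling.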

Summary