About me

Awards

Contributions

This is a page not in the main menu.

Published:

This post will show up by default. To disable scheduling of future posts, edit config.yml and set future: false.

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Short description of portfolio item number 1

Short description of portfolio item number 2

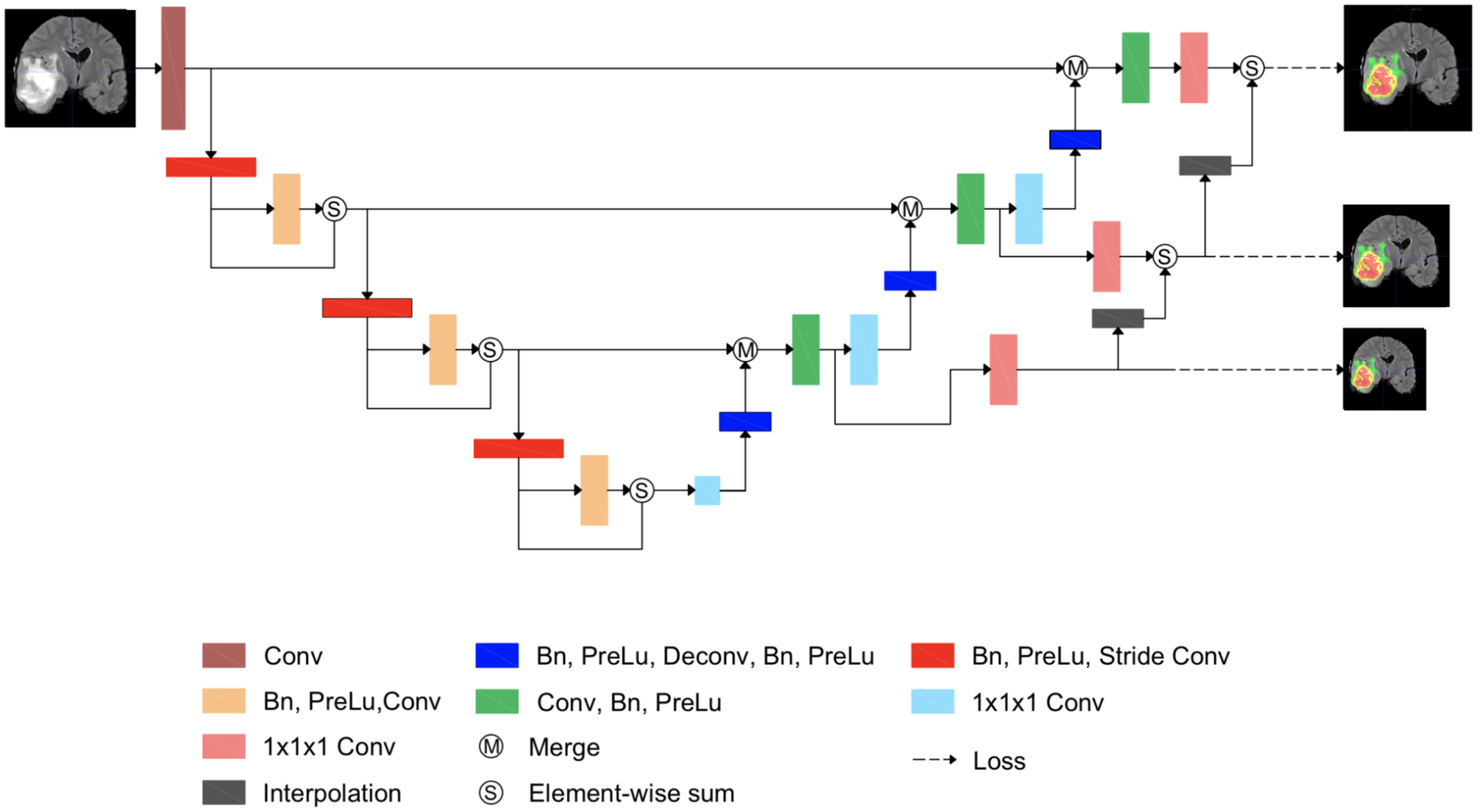

2019 IEEE EMBS International Conference on Biomedical & Health Informatics (BHI), 2019

Traditional U-Net models suffer from vanishing gradients under certain circumstances, such as detecting the presence of tumors in the brain. We introduce a novel Stochastic Aggregated Loss that improves both the gradient flow and the performance of U-Net.

Citation: P. X. Nguyen, Z. Lu, W. Huang, S. Huang, A. Katsuki & Z. Lin (2019). Medical image segmentation with stochastic aggregated loss in a unified U-Net. In 2019 IEEE EMBS International Conference on Biomedical & Health Informatics (BHI 2019), Chicago, USA.

Paper Link: https://ieeexplore.ieee.org/document/8834667

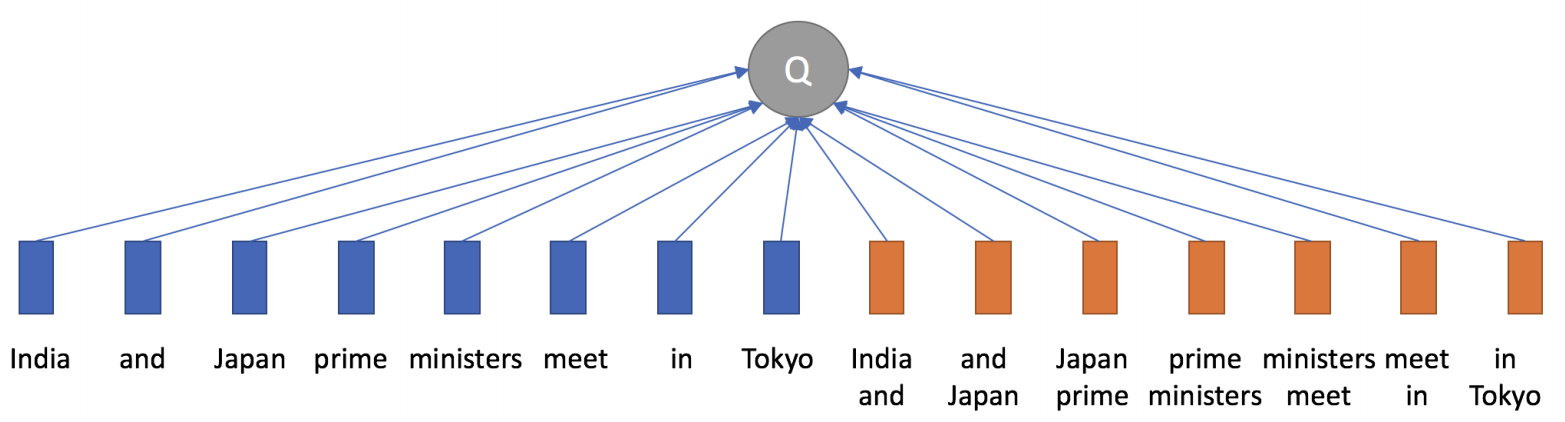

arXiv preprint, 2019

A new attention mechanism that treats phrases, rather than individual tokens, as attention entities in machine translation (MT).

Citation: Xuan-Phi Nguyen, Shafiq Joty, Thanh-Tung Nguyen (2019). Enhancing Attention with Explicit Phrasal Alignments.

Paper Link: https://openreview.net/forum?id=BygPq6VFvS

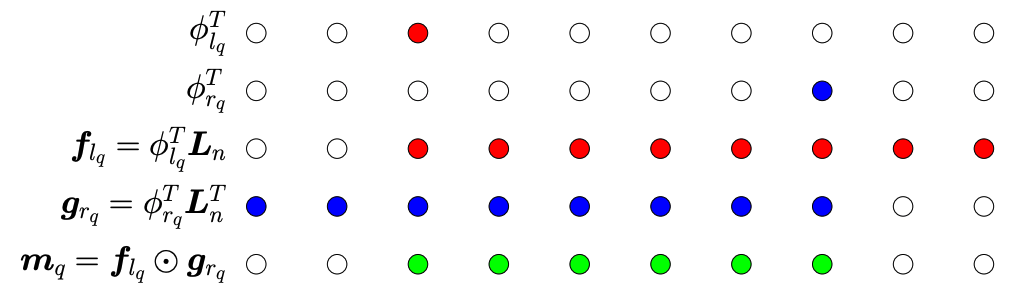

ACL 2020 - The 58th Annual Meeting of the Association for Computational Linguistics, 2020

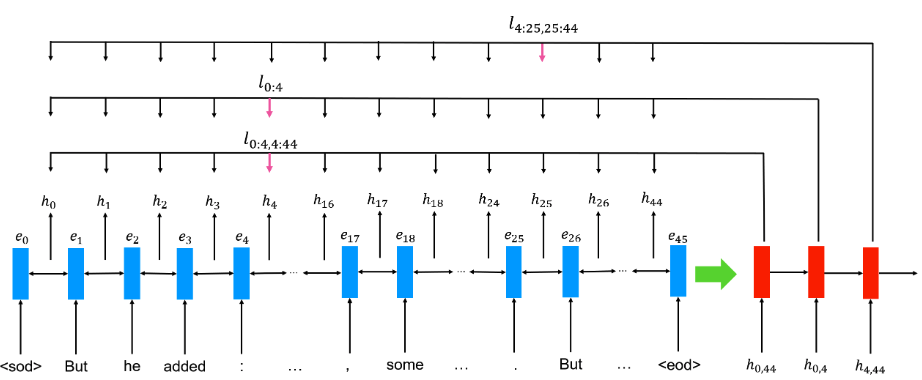

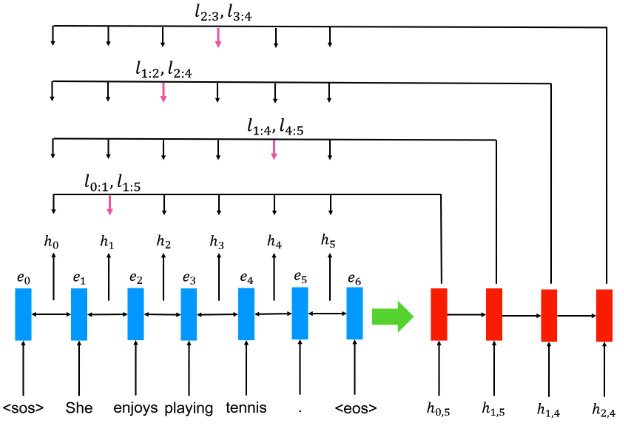

Using differentiable windows to perform local attention improves the performance of machine translation and language modeling.

Citation: Xuan-Phi Nguyen*, Thanh-Tung Nguyen*, Shafiq Joty & Xiaoli Li (2020). Differentiable Window for Dynamic Local Attention. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics. Association for Computational Linguistics.

Paper Link: https://www.aclweb.org/anthology/2020.acl-main.589/

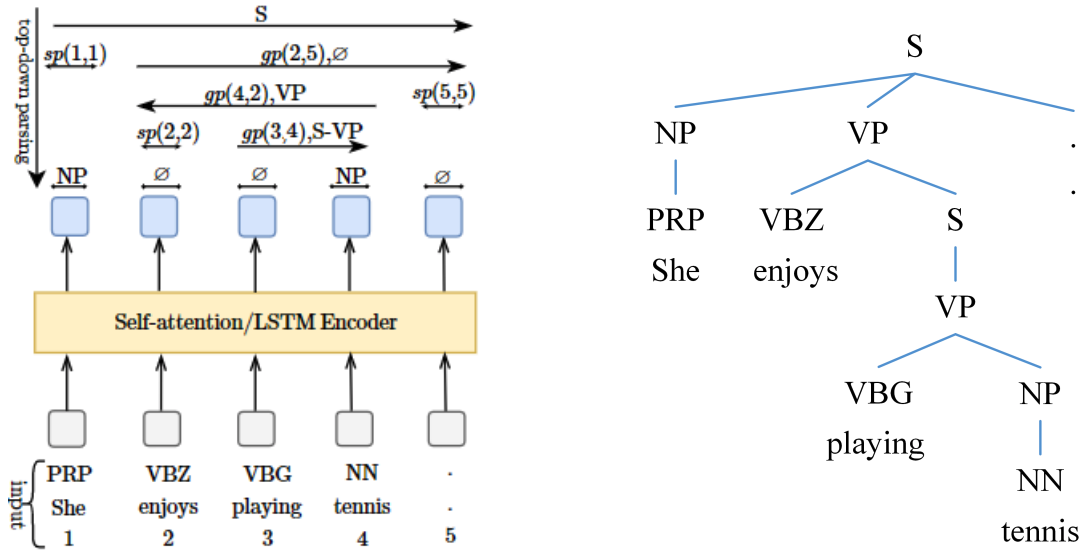

ACL 2020 - The 58th Annual Meeting of the Association for Computational Linguistics, 2020

A new parsing method that employs a pointing mechanism to perform top-down decoding. The method is competitive with the state of the art while being faster.

Citation: Thanh-Tung Nguyen, Xuan-Phi Nguyen, Shafiq Joty & Xiaoli Li (2020). Efficient Constituency Parsing by Pointing. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics. Association for Computational Linguistics.

Paper Link: https://www.aclweb.org/anthology/2020.acl-main.301/

International Conference on Learning Representations (ICLR), 2020

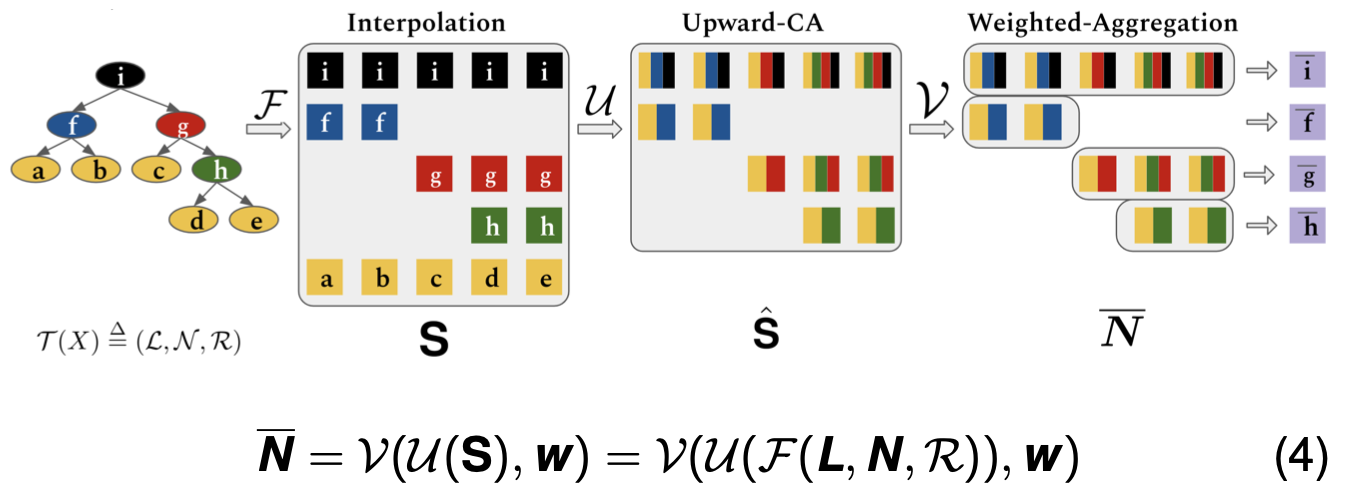

A novel attention mechanism that aggregates hierarchical structures to encode constituency trees for downstream tasks.

Citation: Xuan-Phi Nguyen, Shafiq Joty, Steven Hoi, & Richard Socher (2020). Tree-Structured Attention with Hierarchical Accumulation. In International Conference on Learning Representations.

Paper Link: https://arxiv.org/abs/2002.08046

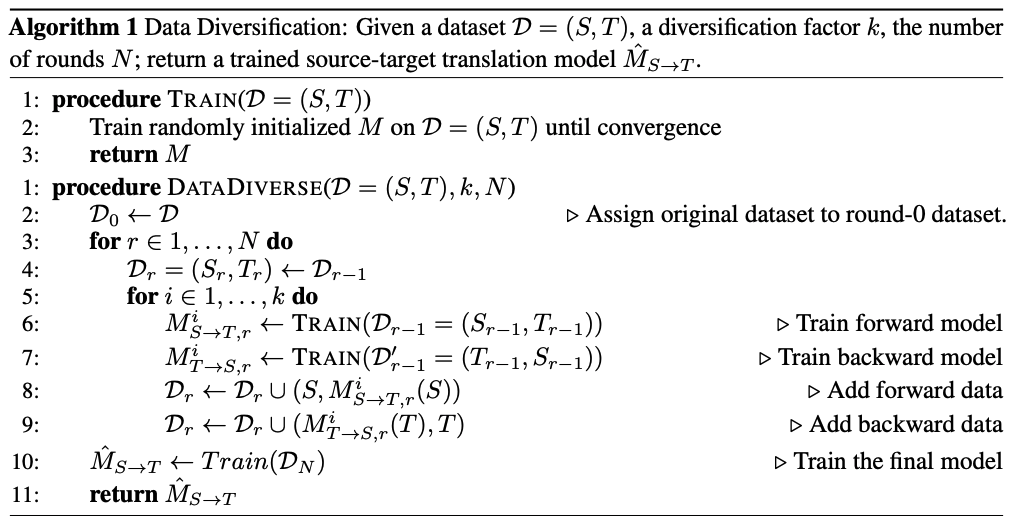

34th Conference on Neural Information Processing Systems (NeurIPS 2020), Vancouver, Canada, 2020

A simple strategy that boosts neural machine translation (NMT) performance across many tasks by using multiple forward and backward models to diversify the training data.

Citation: Xuan-Phi Nguyen, Shafiq Joty, Wu Kui, & Ai Ti Aw (2020). Data Diversification: An Elegant Strategy For Neural Machine Translation. In the 34th Conference on Neural Information Processing Systems (NeurIPS 2020), Vancouver, Canada, 2020.

Paper Link: https://arxiv.org/abs/1911.01986

Proceedings of the North American Chapter of the Association for Computational Linguistics (NAACL), 2021

A novel top-down, end-to-end formulation of document-level discourse parsing in the Rhetorical Structure Theory (RST) framework.

Citation: Thanh-Tung Nguyen, Xuan-Phi Nguyen, Shafiq Joty & Xiaoli Li (2021). In Proceedings of the North American Chapter of the Association for Computational Linguistics (NAACL 2021).

Paper Link: https://www.aclweb.org/anthology/2020.acl-main.589/

ACL 2021 - The 59th Annual Meeting of the Association for Computational Linguistics, 2021

A Seq2Seq parsing framework that casts constituency parsing problems into a series of conditional splitting decisions.

Citation: Thanh-Tung Nguyen, Xuan-Phi Nguyen, Shafiq Joty & Xiaoli Li (2021). A Conditional Splitting Framework for Efficient Constituency Parsing. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics. Association for Computational Linguistics.

Paper Link: not yet available

38th International Conference on Machine Learning (ICML), 2021

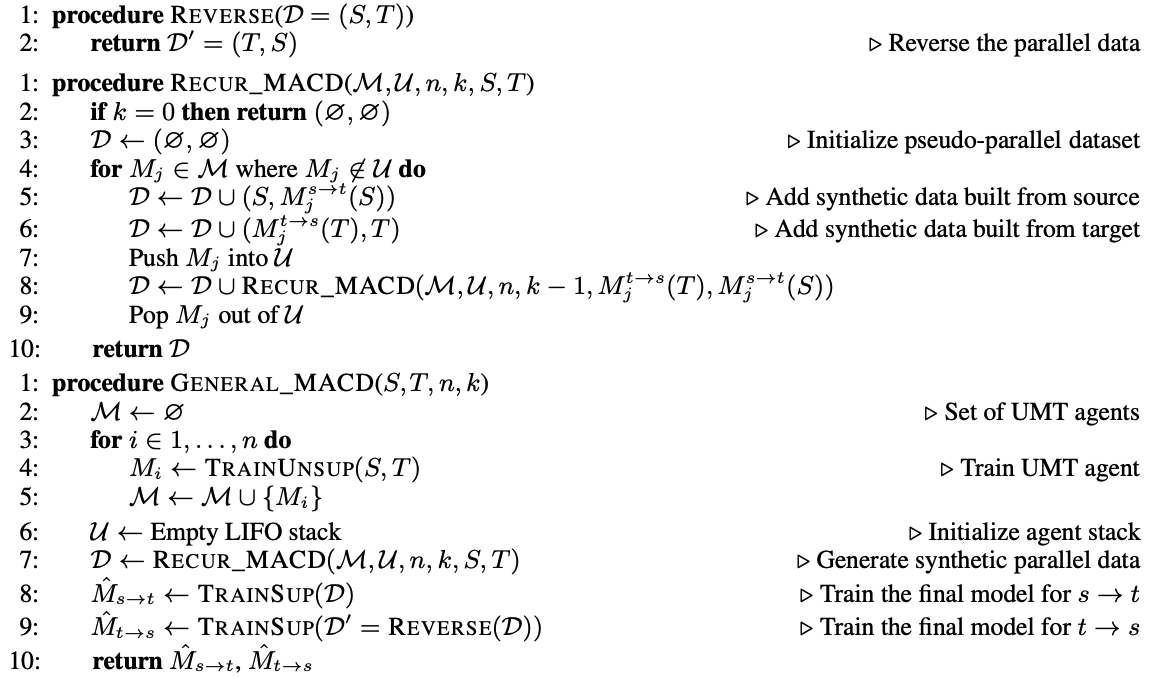

A novel strategy that improves unsupervised machine translation (MT) by using back-translation with multiple models.

Citation: Xuan-Phi Nguyen, Shafiq Joty, Thanh-Tung Nguyen, Wu Kui, & Ai Ti Aw (2021). Cross-model Back-translated Distillation for Unsupervised Machine Translation. In Proceedings of the 38th International Conference on Machine Learning (ICML 2021).

Paper Link: https://arxiv.org/abs/2006.02163

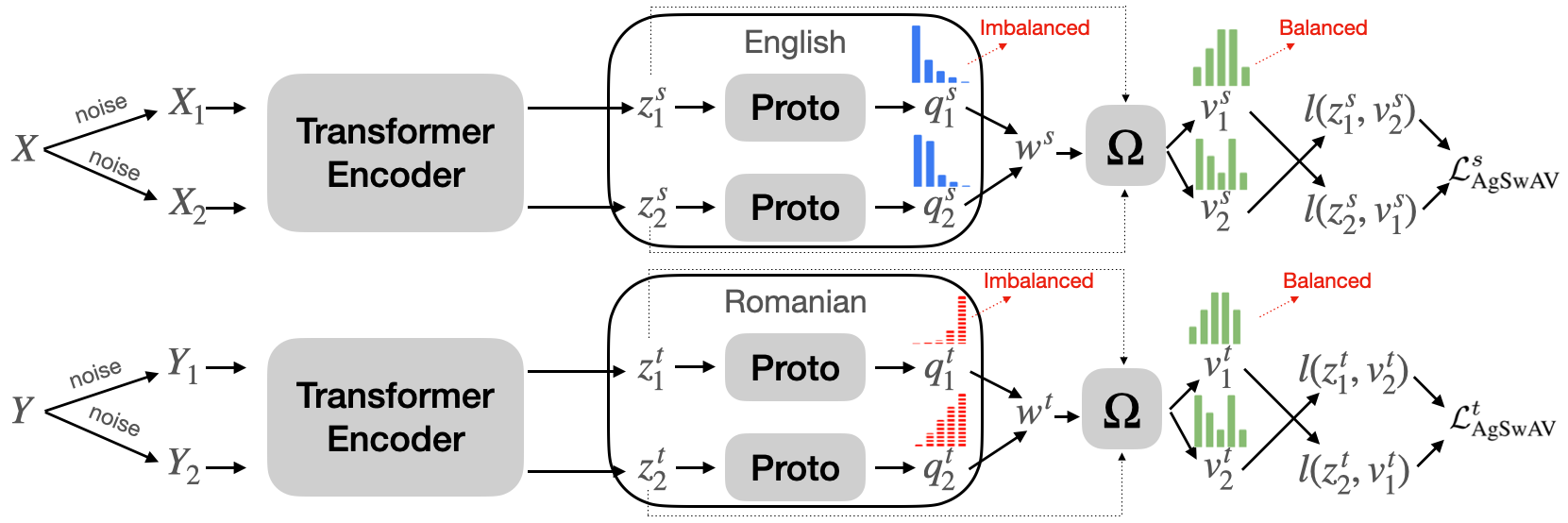

International Conference on Learning Representations (ICLR), 2022

A fully unsupervised mining method that builds synthetic parallel data for unsupervised machine translation.

Citation: Xuan-Phi Nguyen, Hongyu Gong, Yun Tang, Changhan Wang, Philipp Koehn, and Shafiq Joty (2022). Contrastive Clustering to Mine Pseudo Parallel Data for Unsupervised Translation. In International Conference on Learning Representations (ICLR) 2022.

Paper Link: https://openreview.net/pdf?id=pN1JOdrSY9

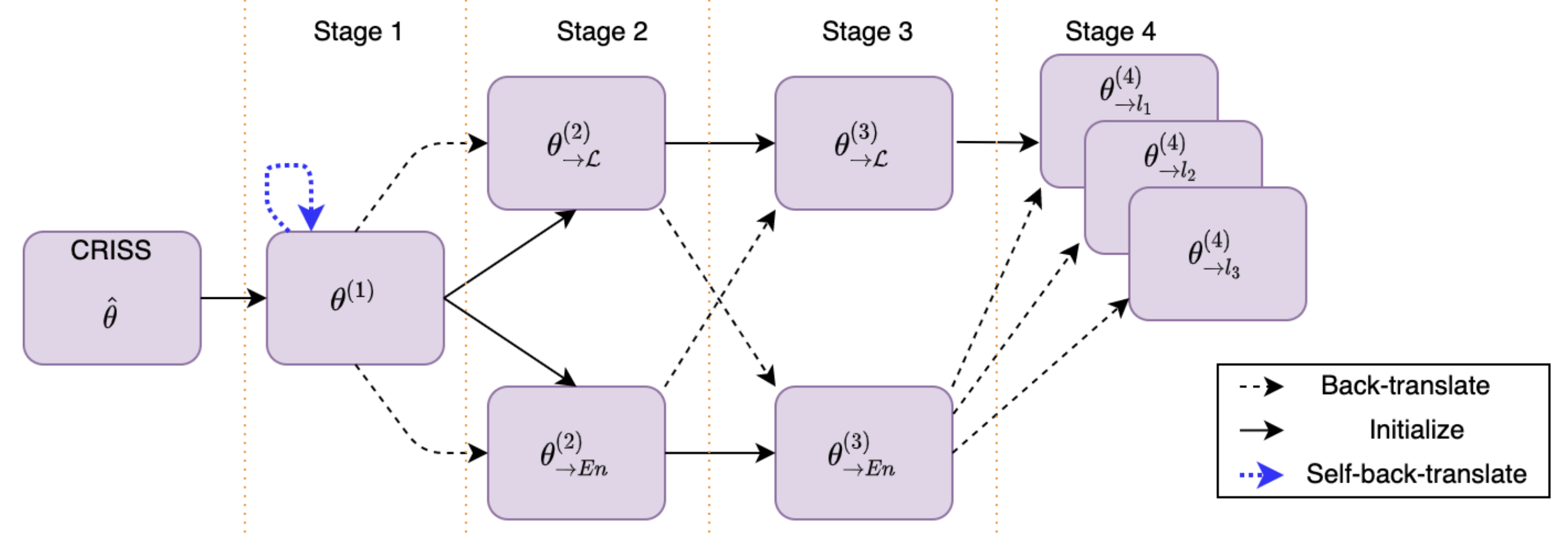

36th Conference on Neural Information Processing Systems (NeurIPS 2022), New Orleans, USA, 2022

Refining Low-Resource Unsupervised Translation by Language Disentanglement of Multilingual Model

Citation: Xuan-Phi Nguyen, Shafiq Joty, Wu Kui & Ai Ti Aw (2022). Refining Low-Resource Unsupervised Translation by Language Disentanglement of Multilingual Model. In the 36th Conference on Neural Information Processing Systems (NeurIPS 2022).

Paper Link: https://arxiv.org/abs/2205.15544

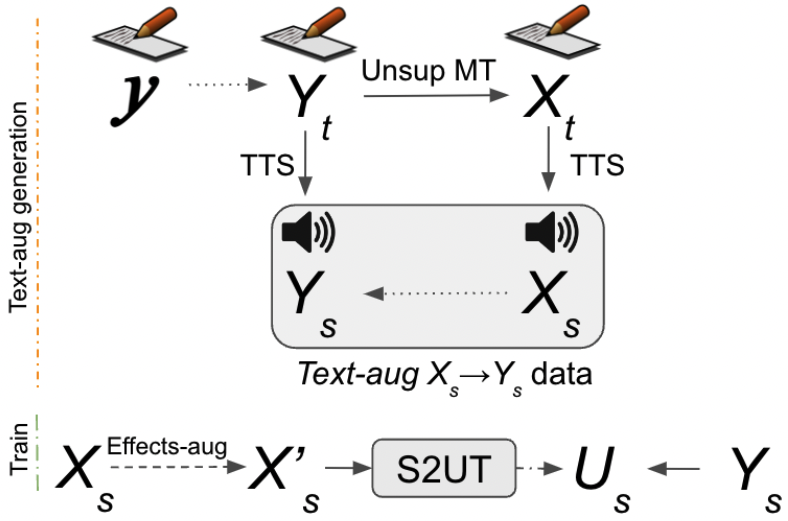

2023 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP 2023), Rhodes Island, Greece, 2023

Improving Speech-to-Speech Translation Through Unlabeled Text

Citation: Xuan-Phi Nguyen, Sravya Popuri, Changhan Wang, Yun Tang, Ilia Kulikov, Hongyu Gong (2023). Improving Speech-to-Speech Translation Through Unlabeled Text. 2023 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP 2023).

Paper Link: https://arxiv.org/abs/2210.14514

EMNLP 2023 - The 2023 Conference on Empirical Methods in Natural Language Processing, 2023

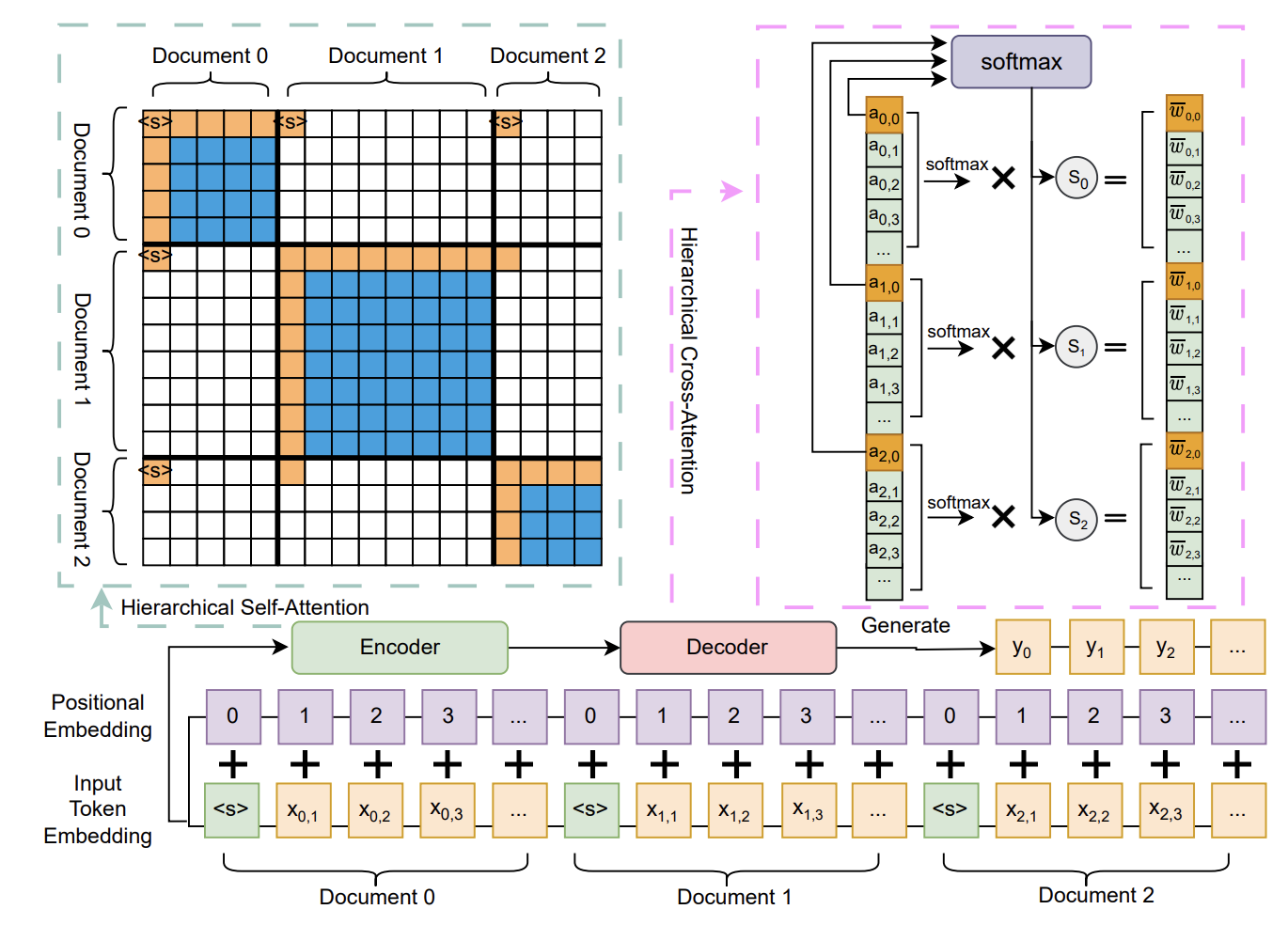

A hierarchical encoding-decoding scheme for abstractive multi-document summarization

Citation: Chenhui Shen, Liying Cheng, Xuan-Phi Nguyen, Yang You, and Lidong Bing (2023). A hierarchical encoding-decoding scheme for abstractive multi-document summarization. In EMNLP 2023 - The 2023 Conference on Empirical Methods in Natural Language Processing.

Paper Link: https://arxiv.org/abs/2210.14514

EMNLP 2023 - The 2023 Conference on Empirical Methods in Natural Language Processing, 2023

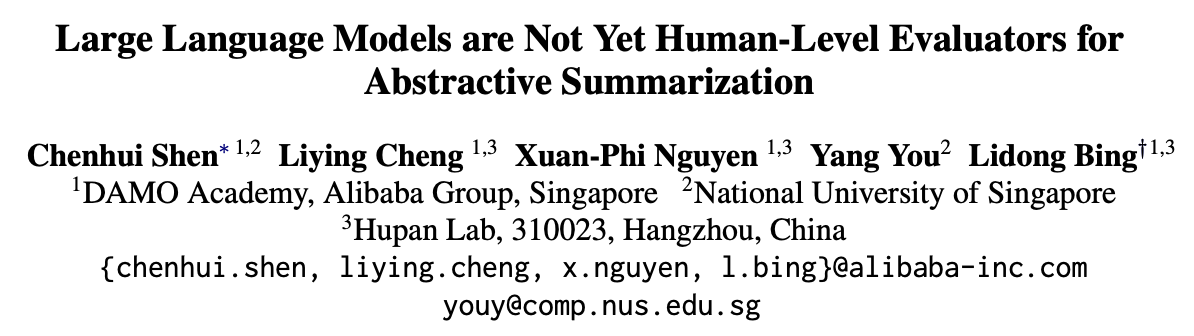

Large language models are not yet human-level evaluators for abstractive summarization

Citation: Chenhui Shen, Liying Cheng, Xuan-Phi Nguyen, Yang You, and Lidong Bing (2023). Large language models are not yet human-level evaluators for abstractive summarization. In EMNLP 2023 - The 2023 Conference on Empirical Methods in Natural Language Processing.

Paper Link: https://arxiv.org/pdf/2305.13091

ACL 2024 - Proceedings of the Annual Meeting of the Association for Computational Linguistics, 2024

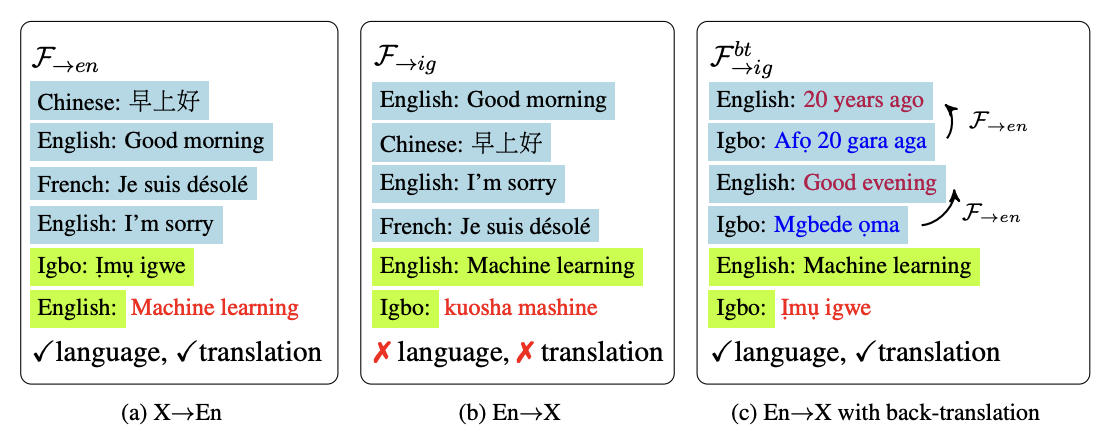

Democratizing LLMs for low-resource languages by leveraging their English dominant abilities with linguistically-diverse prompts

Citation: Xuan-Phi Nguyen, Sharifah Mahani Aljunied, Shafiq Joty, Lidong Bing (2024). Democratizing LLMs for low-resource languages by leveraging their English dominant abilities with linguistically-diverse prompts. ACL 2024 - Proceedings of the Annual Meeting of the Association for Computational Linguistics.

Paper Link: https://arxiv.org/pdf/2306.11372

ACL 2024 (DEMO) - Proceedings of the Annual Meeting of the Association for Computational Linguistics, 2024

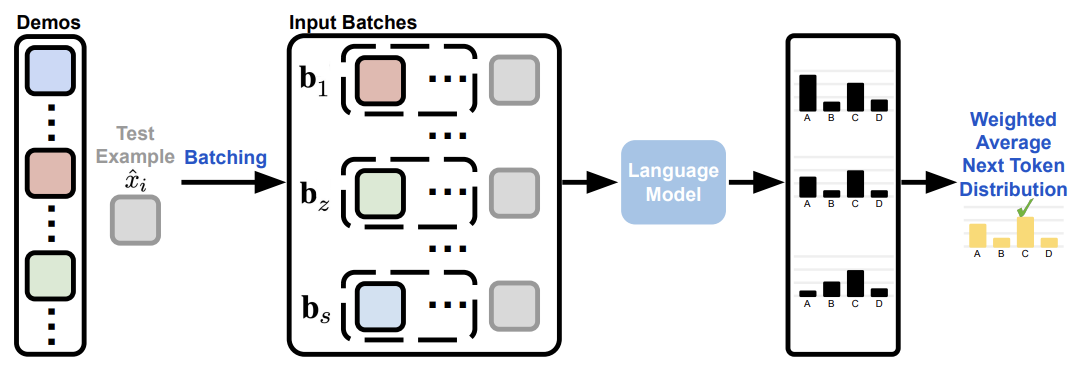

ParaICL: Towards Robust Parallel In-Context Learning

Citation: Xingxuan Li, Xuan-Phi Nguyen, Shafiq Joty, Lidong Bing (2024). ParaICL: Towards Robust Parallel In-Context Learning. arXiv preprint.

Paper Link: https://arxiv.org/abs/2404.00570

ACL 2024 (DEMO) - Proceedings of the Annual Meeting of the Association for Computational Linguistics, 2024

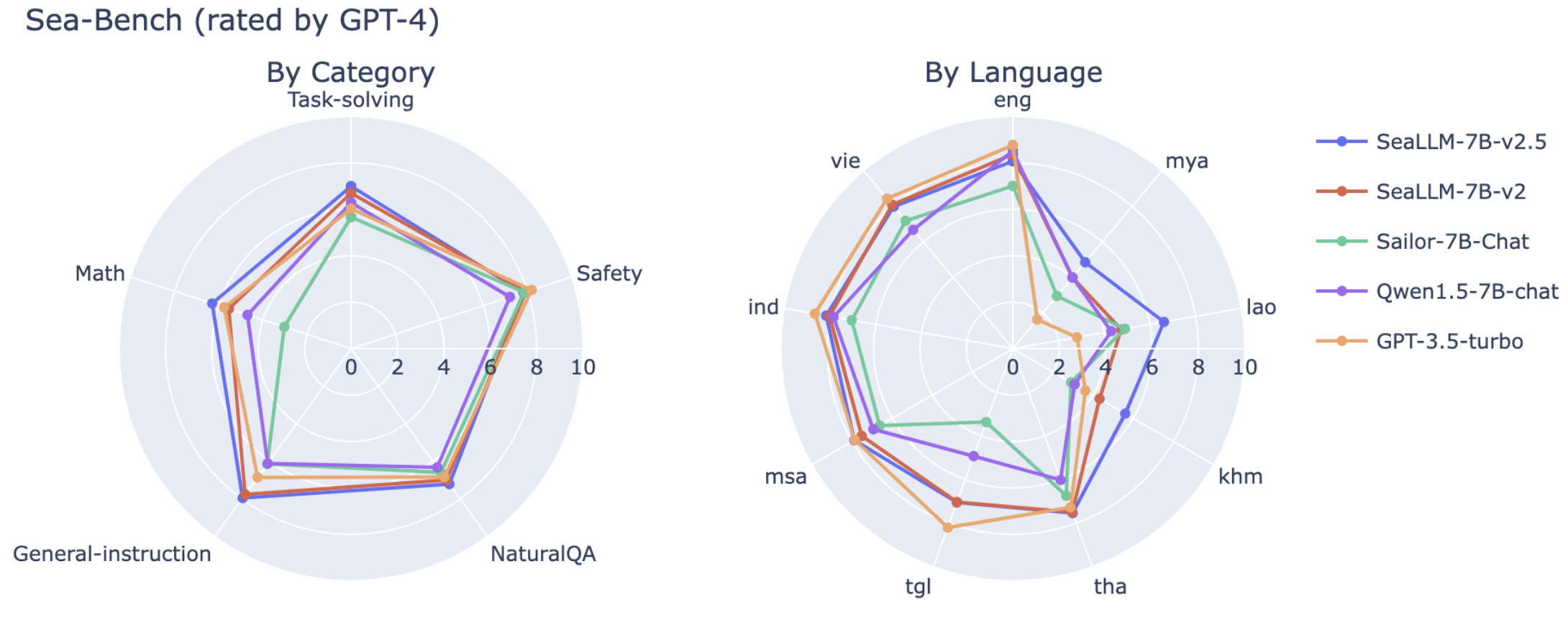

SeaLLMs - Large Language Models for Southeast Asia

Citation: Xuan-Phi Nguyen, Wenxuan Zhang, Xin Li, Mahani Aljunied, Qingyu Tan, Liying Cheng, Guanzheng Chen, Yue Deng, Sen Yang, Chaoqun Liu, Hang Zhang, Lidong Bing (2024). SeaLLMs - Large Language Models for Southeast Asia. ACL 2024 - Proceedings of the Annual Meeting of the Association for Computational Linguistics.

Paper Link: https://arxiv.org/abs/2312.00738

Technical Report, 2024

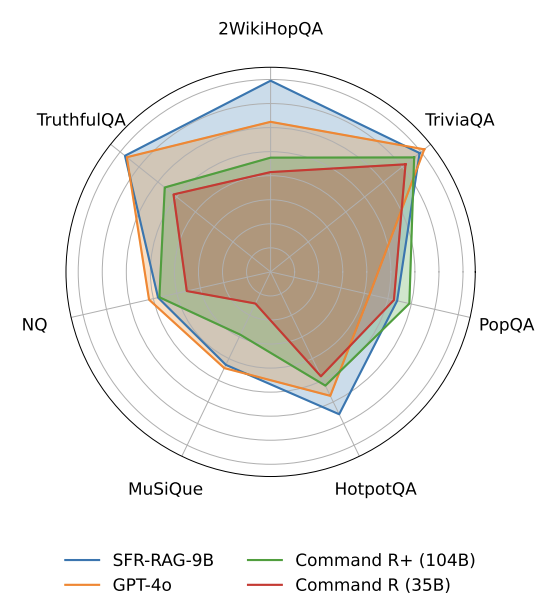

SFR-RAG: Towards Contextually Faithful LLMs

Citation: Xuan-Phi Nguyen, Shrey Pandit, Senthil Purushwalkam, Austin Xu, Hailin Chen, Yifei Ming, Zixuan Ke, Silvio Savarese, Caiming Xiong, Shafiq Joty (2024). SFR-RAG: Towards Contextually Faithful LLMs. arXiv preprint (technical report).

Paper Link: https://arxiv.org/abs/2409.09916

Published:

This is a description of your talk, which is a markdown file that can be markdown-ified like any other post. Yay markdown!

Published:

This is a description of your conference proceedings talk; note the different value in the type field. You can put anything in this field.

Undergraduate course, University 1, Department, 2014

This is a description of a teaching experience. You can use markdown like any other post.

Workshop, University 1, Department, 2015

This is a description of a teaching experience. You can use markdown like any other post.